Ensemble learning is a powerful technique for improving model performance. It works so well that it has been, and still is, widely used in machine learning competitions such as those on Kaggle. So, let’s look at what it is and how it works.

What is ensemble learning?

The idea of ensemble learning is simple: instead of relying on a single model for a problem, we use multiple models for the same problem, and each prediction is decided by votes from all of them. The value with the majority of votes is chosen as the final output.

For example, if we are classifying cats and dogs with 10 models and 7 of them classify a data point as a cat, then the final output will be cat, as that value has the majority of the votes.
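The voting step in this example can be sketched in a few lines of Python. This is only a minimal illustration: the function name `majority_vote` and the list of votes are made up for this post, and the ten "models" are just hard-coded labels rather than real classifiers.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the label predicted by the most models."""
    label, _count = Counter(predictions).most_common(1)[0]
    return label

# Ten hypothetical model outputs for one image: 7 say "cat", 3 say "dog".
votes = ["cat"] * 7 + ["dog"] * 3
final_label = majority_vote(votes)
print(final_label)  # cat
```

With an even number of models a tie is possible, so in practice people often use an odd number of voters or define a tie-breaking rule.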


How does ensemble learning work?

In ensemble learning, we take the help of multiple models. These models could be different (like a combination of KNN, linear regression, and a decision tree) or the same (for example, all of them could be linear regression models), and there can be any number of them. Also, we can build ensemble models for both regression and classification problems.

Just like in normal model training, we train each individual model, but each one on a different sample of data extracted randomly from the training data. These individual models are called weak models (or weak learners), as each one on its own has a high chance of making mistakes. After training, we run them on the test data and collect the output of every model for each data point. These outputs are then combined into the prediction of the final model, which is called a strong model because its chance of making a mistake is usually much lower than that of any individual model.
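To make the "different random sample for each model" step concrete, here is a small sketch. It assumes sampling with replacement (the bootstrap sampling used in bagging), which is one common choice and not the only one. The helper `bootstrap_sample` and the toy `training_rows` are hypothetical names used only for this illustration.

```python
import random

def bootstrap_sample(rows, rng):
    """Draw len(rows) rows at random, with replacement (an assumption)."""
    return [rng.choice(rows) for _ in rows]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
training_rows = list(range(10))  # stand-in for 10 training examples

# One random sample per base model (3 hypothetical models here).
samples = [bootstrap_sample(training_rows, rng) for _ in range(3)]
for i, sample in enumerate(samples):
    print(f"model {i} trains on rows {sorted(sample)}")
```

Because rows are drawn with replacement, some rows show up more than once in a sample and others are left out, so each base model sees a slightly different view of the data.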

In the final step, the ensemble decides the final output based on each individual model’s output. For a regression problem, it takes the average of those output values. For a classification problem, it picks the class with the most votes (the number of models that predicted each class) and makes it the final output.
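For the regression case, the combining step is just an arithmetic mean. The sketch below assumes three base models whose outputs are hard-coded, and the name `average_prediction` is invented for this example.

```python
from statistics import mean

def average_prediction(outputs):
    """Combine regression outputs by taking their arithmetic mean."""
    return mean(outputs)

# Hypothetical price predictions from three base models for one house.
model_outputs = [200.0, 210.0, 190.0]
final_output = average_prediction(model_outputs)
print(final_output)  # 200.0
```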


Why do we use ensemble learning?

To understand this, let’s take an example. Suppose that after exploring some posts on Facebook, you got confused about the shape of the Earth and you want to know whether the Earth is flat or not.

So, you asked your friend about it and he gave you his answer. The chance of your friend’s answer being wrong is pretty high, as he may not have a clear understanding of it either. So, you asked multiple random people about it and noted down their answers. After this survey, you chose the answer with the most votes and reached your conclusion, i.e., the Earth is flat. Just kidding XD.

Similarly, to reduce the chances of getting wrong answers or predictions, we don’t rely on the answer of just one model; we use ensemble learning instead.
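We can put a rough number on this intuition. The sketch below makes two strong assumptions that real models rarely satisfy exactly: the problem has only two classes, and every model is correct with the same probability, independently of the others. Under those assumptions the chance that the majority is right follows a binomial distribution. The function name `majority_accuracy` and the value 0.7 are made up for illustration.

```python
from math import comb

def majority_accuracy(n_models, p_correct):
    """Probability that more than half of n independent models are right."""
    needed = n_models // 2 + 1  # smallest strict majority
    return sum(
        comb(n_models, k) * p_correct**k * (1 - p_correct) ** (n_models - k)
        for k in range(needed, n_models + 1)
    )

single = 0.7  # assumed accuracy of each individual model
for n in (1, 5, 11, 21):
    print(n, round(majority_accuracy(n, single), 3))
```

The same formula also shows the catch: if each model is right less than half of the time, adding more voters makes the majority worse, not better.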

Categories of ensemble learning

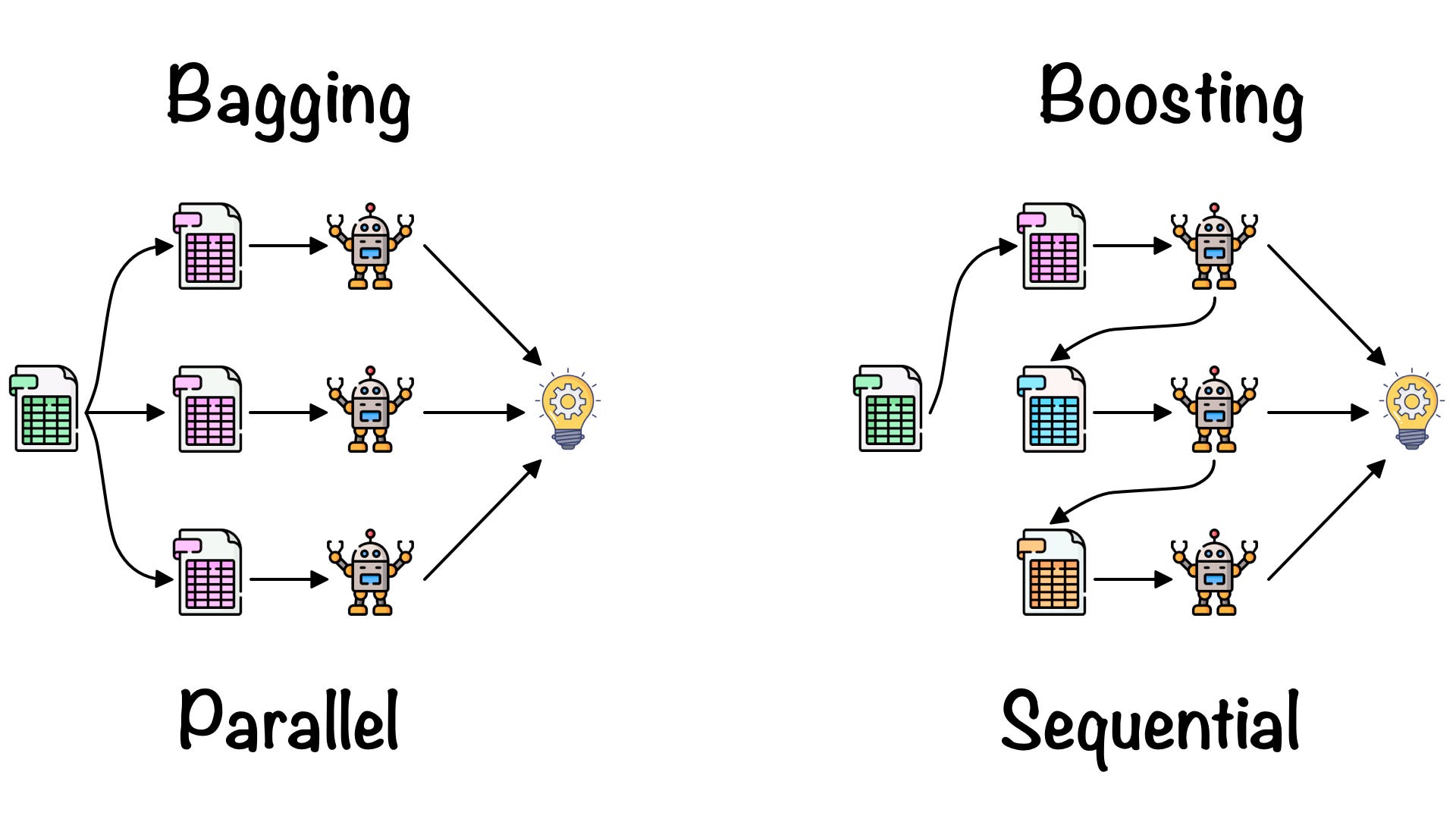

Ensemble learning falls into two categories: parallel ensemble learning and sequential ensemble learning. The ensemble methods most commonly used are bagging and boosting.

Difference between parallel and sequential ensemble learning

Parallel Ensemble learning

In parallel ensemble learning, base learners are generated in parallel to encourage independence between the base learners. Because their outputs are averaged, independent base learners tend to cancel out each other’s errors, which reduces the overall error.

These include homogeneous ensembles (in which the same base learning algorithm is used to train all base estimators), such as bagging and random forests, and heterogeneous ensembles (in which different base learning algorithms are used to train the base estimators), such as stacking.
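The effect of averaging independent base learners can be seen in a small simulation. This is a toy setup, not a real training run: each "learner" is assumed to return the true value plus independent Gaussian noise, and `TRUE_VALUE`, `noisy_learner`, and `ensemble` are hypothetical names chosen for this sketch.

```python
import random
from statistics import pvariance

rng = random.Random(42)  # fixed seed for repeatability
TRUE_VALUE = 10.0        # hypothetical quantity the learners estimate

def noisy_learner():
    """One base learner: the true value plus independent Gaussian noise."""
    return TRUE_VALUE + rng.gauss(0.0, 1.0)

def ensemble(n_learners):
    """Average the outputs of n independent base learners."""
    return sum(noisy_learner() for _ in range(n_learners)) / n_learners

trials = 2000
single_var = pvariance([noisy_learner() for _ in range(trials)])
ensemble_var = pvariance([ensemble(25) for _ in range(trials)])
print(f"single learner variance: {single_var:.3f}")
print(f"25-learner average variance: {ensemble_var:.3f}")
```

The averaged predictions spread out far less than a single learner's predictions. If the learners' errors were strongly correlated instead of independent, the gain would be much smaller, which is why parallel methods try to keep base learners as independent as possible.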

Sequential Ensemble learning

In sequential ensemble learning, base learners are generated one after another to take advantage of the dependence between them. The model’s performance is improved by assigning higher weights to examples that earlier learners misclassified, so that later learners focus on them. Boosting is an example.
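In boosting, the weights are usually attached to the training examples that earlier learners got wrong. As a rough sketch, here is one round of reweighting in the style of AdaBoost, which is just one boosting algorithm among several. The function name `reweight` and the toy data are assumptions for this example, and the code expects the weighted error to be strictly between 0 and 1.

```python
import math

def reweight(weights, is_wrong):
    """One AdaBoost-style round: raise weights of misclassified examples."""
    # Weighted error of the current learner (must be between 0 and 1).
    error = sum(w for w, wrong in zip(weights, is_wrong) if wrong)
    alpha = 0.5 * math.log((1 - error) / error)  # learner's vote strength
    updated = [
        w * math.exp(alpha if wrong else -alpha)
        for w, wrong in zip(weights, is_wrong)
    ]
    total = sum(updated)  # normalise so the weights sum to 1 again
    return [w / total for w in updated], alpha

# Five training examples with equal starting weights; the first
# (hypothetical) learner misclassifies only the last one.
weights = [0.2] * 5
is_wrong = [False, False, False, False, True]
new_weights, alpha = reweight(weights, is_wrong)
print([round(w, 3) for w in new_weights])
```

After this round the single misclassified example carries half of the total weight, so the next learner in the sequence is pushed to get it right.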


Challenges of ensemble learning

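Apart from providing better accuracy, ensemble learning also has some downsides:

- It takes much more time, since several models have to be trained and run

- It requires extra memory to hold all of the models

- It lacks decision explainability, as the final output comes from many models at once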

Summary

Ensemble learning is one of the cool techniques that can improve the performance of a model significantly. Instead of using a single model for prediction, it uses multiple models, which decreases the error rate. It can be used for both regression and classification. But despite the performance improvement, it also has some downsides, such as extra time, extra memory, and explainability issues.

So, that was all about ensemble learning. Hope you liked this article 🙂

Data Scientist with 3+ years of experience in building data-intensive applications in diverse industries. Proficient in predictive modeling, computer vision, natural language processing, data visualization etc. Aside from being a data scientist, I am also a blogger and photographer.

Aman Kumar https://buggyprogrammer.com/author/buggy5454/
