NLP, short for Natural language processing, is one of the hottest topics in Artificial intelligence: it is what helps AI understand and communicate with us. In this article we will learn what NLP is, why we need it, how it started, how it works, some common terminology, its applications, and much more. Hope you enjoy it 😊.

What is Natural language processing?

Natural language processing, also known as NLP, is a subfield of linguistics, computer science, and artificial intelligence that deals with human language data, both text and speech. NLP is an attempt to give AI the ability to understand, process, and generate written and spoken (auditory) language in a similar way to how a human does.

Natural language processing helps process human language, whether written or spoken, and extract meaningful insights from it. It does this with the help of computational linguistics (rule-based modeling of human language), statistics, machine learning, and deep learning. In particular, it is the subfield of AI that studies how to program computers to process and analyze large amounts of natural language data.

Why do we need Natural language processing?

So now we know what NLP is, but why do we even need it? Before answering that, let’s look at the importance of textual and auditory data. The most important factor that made us the most intelligent species on Earth is our ability to share thoughts and knowledge, and we could share them because we developed a complex language to communicate with. In today’s world we produce huge amounts of text and audio data, and the amount keeps growing. Social media is a nice example: have you ever reached the bottom of your Facebook/Instagram/Twitter news feed? No, right! (This fact is only valid for non-programmers XD)

One more reason: as our technology improves, we humans are getting lazier and rely on machines for almost every task, which is both good and bad. If a machine cannot understand and talk in our language, it won’t perform those tasks well, right? That is why it is so important for a machine to be able to understand and talk with us in our own language.

Imagine talking with Siri in her language (binary). Well, still easier than Chinese! XD

To process all this data we need something faster than us, which is obviously machines. But machines don’t know our language, so we came up with NLP: with the help of linguistics (the scientific study of language) and other tools, it lets computers process our language.

History of Natural language processing

Interesting, isn’t it? Now you might be wondering whether this is a new technology or an old one. Actually, NLP has been around for more than 70 years. It originated in the 1940s, around the time of the Second World War, with the idea of machine translation. The primary idea was to convert one human language into another, which later led to the idea of translating between human language and machine language.

Let’s look at its timeline:

- 1940s → The idea of automatic language translation was developed, which led to the thought of translating between human and machine language.

- 1950s → In 1950, Alan Turing published an article titled “Computing Machinery and Intelligence”, which proposed what is now known as the “Turing test”: if a machine can take part in a conversation with humans without being detected as a machine, it can be considered to have human-like intelligence.

Also, the Georgetown experiment in 1954 involved the fully automatic translation of more than sixty Russian sentences into English. Its authors claimed that machine translation would be solved within the next three to five years. But it didn’t go as planned: the ALPAC report of 1966 concluded that progress had fallen far short of expectations, which drastically reduced funding for machine translation research.

- 1957 → Noam Chomsky published “Syntactic Structures”, a book that revolutionized linguistics with ‘universal grammar’, a rule-based system of syntactic structures. This later helped rule-based approaches to NLP and machine translation.

- 1960s → Some successful NLP systems were developed, like SHRDLU (a natural language system working in restricted “blocks worlds” with restricted vocabularies) and ELIZA (a simulation of a Rogerian psychotherapist). Despite using almost no information about human thought or emotion, ELIZA sometimes produced startlingly human-like interactions.

- 1970s → During this time many chatterbots were written, for example PARRY.

- 1980s → Machine learning algorithms were introduced for natural language processing; before then, most NLP systems were based on hand-written rules. This was due both to the steady increase in computational power and to the gradual decline in the dominance of Noam Chomsky’s theories, e.g. transformational grammar.

- 1990s → Statistical methods achieved many notable successes, particularly in machine translation, and probabilistic, data-driven models became quite normal.

- 2000s → With the growth of the web, huge amounts of raw text became available, so research increasingly focused on unsupervised and semi-supervised learning, which can learn from data that has not been hand-annotated with the desired answers, or from a combination of annotated and unannotated data.

- 2010s → Representation learning and deep learning methods became widespread in NLP, and they drive most of the current progress in the field.

Application of Natural language processing

Ever talked to Siri (Apple’s virtual assistant)? It’s a great example of NLP. Siri takes your voice command, converts it into a text query, understands it through NLP, and responds after converting the result back into audio. This is not the only use case of NLP, though; it has lots of other applications. Let’s see some of them!

- Spam detection → Spam detection is used to separate malicious or unwanted emails/messages from important ones. A famous example is Google’s Gmail.

- Sentiment analysis → It is used to analyze whether a document, review, or rating expresses positive or negative sentiment. For example, Facebook and other big social media platforms use this kind of analysis to help remove hate speech and other harmful content.

- Chatbot → You must have seen these a lot on websites and in applications. They automate answers to the questions users ask most often, and they are used by many big companies and brands.

- Speech Recognition → Speech recognition is used to process auditory data; for example, Siri processes your voice command and then responds with the result.

- Machine Translation → It is used to translate a text or document from one language to another, for example ‘Google Translate’

- Spell Checking → Spell checkers help spot or auto-correct misspelled words in a sentence or document. For example, if you have used Grammarly, it detects misspelled words and also corrects grammar.

- Helping Disabled People → NLP is helping many people who have lost their voice by giving them a second voice.

There are many more exciting applications of NLP, which you can explore with a simple Google search.
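To make sentiment analysis a bit more concrete, here is a minimal Python sketch of the simplest approach, a lexicon-based scorer: count the positive and negative words in a text and compare the two counts. The word lists `POSITIVE` and `NEGATIVE` and the function `sentiment` are hypothetical names made up for this illustration; real platforms rely on much larger lexicons or trained machine learning models.

```python
# Hypothetical, hand-picked word lists for illustration only.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "sad"}


def sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    # Lower-case each word and strip trailing/leading punctuation.
    words = [w.strip(".,!?").lower() for w in text.split()]
    # True counts as 1 when summed, so this is (#positive - #negative).
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the camera is great!"))  # positive
print(sentiment("Terrible battery, I hate it."))             # negative
```

This approach is fast and easy to understand, but it ignores context such as negation (“not good”) and sarcasm, which is why more advanced models are used in practice.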

How does Natural language processing work?

Natural language processing mainly explores two big ideas. First, there is natural language understanding, which extracts meaning from combinations of letters. This is the AI that filters spam in your inbox, figures out whether an Amazon search for “apple” was grocery or computer shopping, and tells Siri which song you want to play.

Second, there is natural language generation, which generates language from knowledge. This is the AI that performs translation, summarizes documents, synthesizes music, or chats with you. The key to both problems is understanding the meaning of words, which is a bit tricky. So, let’s see how sentences are processed to help NLP understand them better.

To help a machine understand a sentence or document, we perform the following steps:

- Tokenization → We split a sentence, phrase, or document into individual words, punctuation marks, etc. (called tokens); this process is known as tokenization. If we then ignore the order of the tokens and simply count how often each one appears, we get a representation called a “bag of words”. So remember: “tokenization” is the process of splitting text into tokens, while a “bag of words” is one common way of representing the result.

- Stop words → After splitting the sentence into words, we remove the elements that do not add much value, such as numbers, punctuation marks, URLs, and stop words (in English, for example, “the”, “is”, and “and”). This saves processing power and often improves model accuracy.

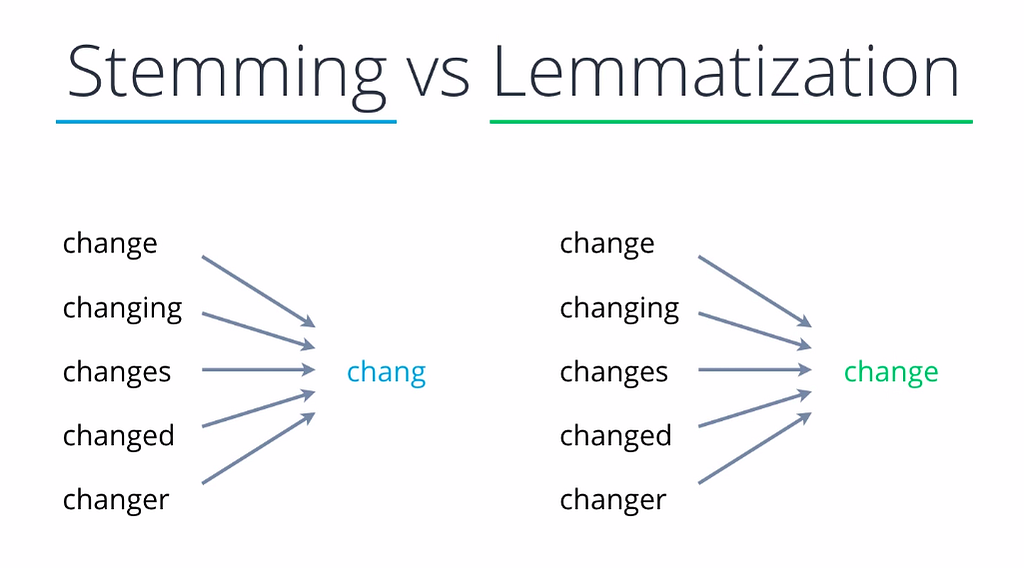
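Here is a minimal, dependency-free Python sketch of the two steps above, assuming a simple regular-expression tokenizer and a tiny hand-written stop-word list. `STOP_WORDS`, `tokenize`, `remove_noise`, and `bag_of_words` are hypothetical names for illustration; in practice you would more likely use nltk’s `word_tokenize` and its stop-word corpus, which need their data packages downloaded first.

```python
import re
from collections import Counter

# Tiny, hypothetical stop-word list; real lists (e.g. nltk's) are much longer.
STOP_WORDS = {"the", "is", "and", "a", "an", "on", "of", "to", "in", "at", "for", "it"}
URL_PATTERN = re.compile(r"https?://\S+")


def tokenize(text: str) -> list[str]:
    """Split text into word tokens (keeping contractions like "isn't") and punctuation tokens."""
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)


def remove_noise(tokens: list[str]) -> list[str]:
    """Lower-case tokens and drop stop words, numbers, and punctuation."""
    return [
        token.lower()
        for token in tokens
        if token[0].isalpha() and token.lower() not in STOP_WORDS
    ]


def bag_of_words(tokens: list[str]) -> Counter:
    """Count how often each token appears, ignoring word order."""
    return Counter(tokens)


text = "The cat sat on the mat, and the cat is happy! See https://example.com for 2 photos."
tokens = tokenize(URL_PATTERN.sub(" ", text))  # strip URLs before tokenizing
content_words = remove_noise(tokens)

print(tokens[:7])                                  # ['The', 'cat', 'sat', 'on', 'the', 'mat', ',']
print(content_words)                               # ['cat', 'sat', 'mat', 'cat', 'happy', 'see', 'photos']
print(bag_of_words(content_words).most_common(1))  # [('cat', 2)]
```

URLs are removed before tokenizing because a tokenizer like this one would otherwise split them into fragments such as “https”, “:”, and “/”.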

- Stemming/Lemmatization → Words like “eating”, “eat”, and “ate”, or “better”, “good”, and “best”, are forms of the same words “eat” and “good”. These different forms mean the same thing at some level and often don’t add extra value to a sentence. Keeping them all separate increases the processing cost and can decrease accuracy, so we convert each word to its root form; for example, “running” becomes “run”.

This is done with stemming or lemmatization. The difference between the two is that stemming simply chops off the last few characters of a word, which often produces meaningless words (incorrect output); it is still used because it is fast and needs little processing power. Lemmatization, on the other hand, converts the word to its root form (its lemma) by following grammar rules, so it is mostly correct and gives higher accuracy, but since it has to analyze every single word, it takes extra time.

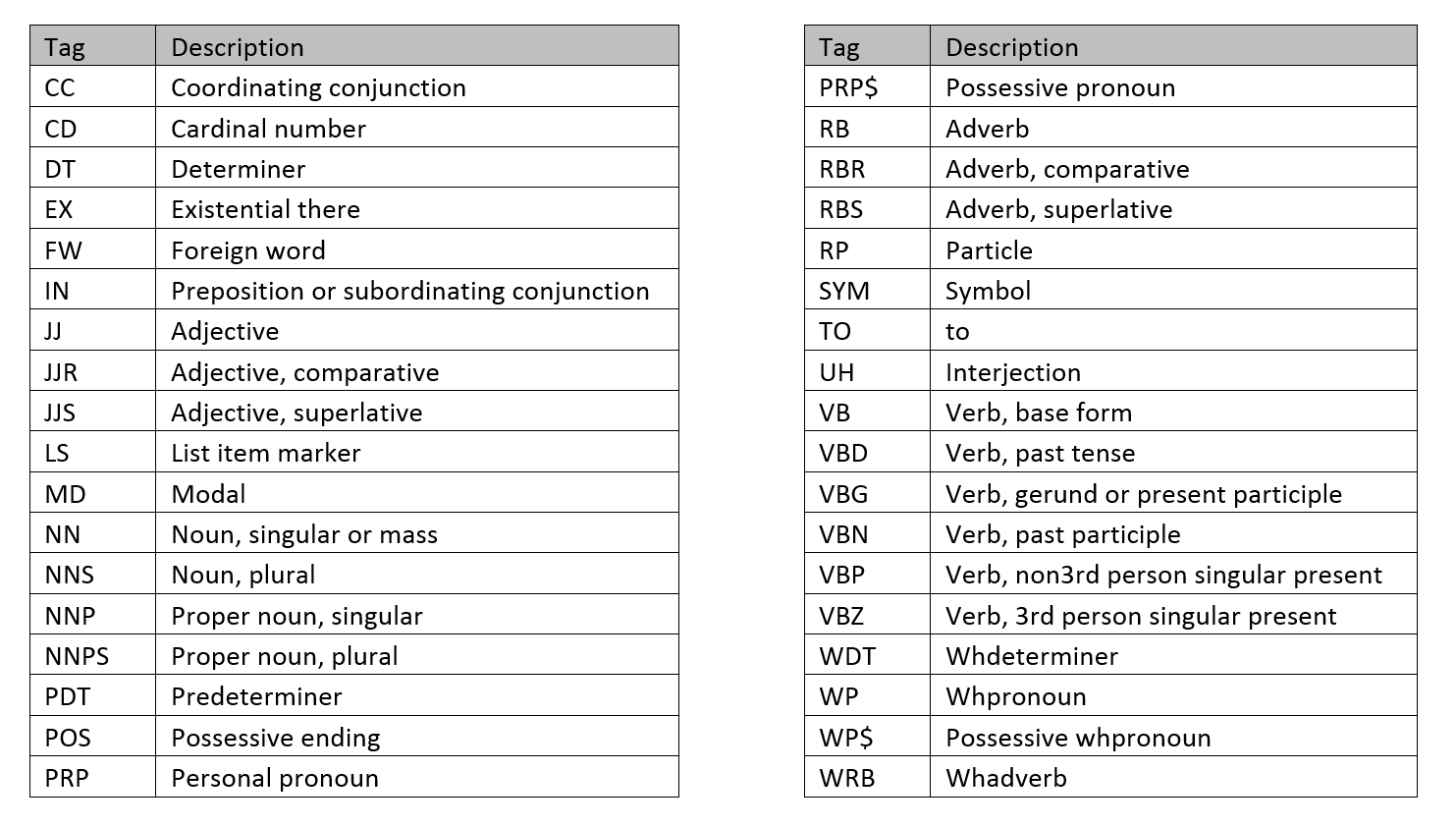
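The difference between the two approaches is easy to see with a toy example. The sketch below is a simplification, not an algorithm from any library: `crude_stem` just chops off a few common suffixes (real stemmers, such as nltk’s `PorterStemmer`, apply many more rules), and `lemmatize` looks words up in a tiny hypothetical dictionary, whereas real lemmatizers, such as nltk’s `WordNetLemmatizer`, rely on a full lexical database and ideally the word’s part of speech.

```python
# Toy suffix-stripping stemmer: chops common English endings without checking the result.
SUFFIXES = ("ing", "ly", "ed", "es", "s")


def crude_stem(word: str) -> str:
    for suffix in SUFFIXES:
        # Keep at least three characters so short words are not destroyed.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


# Toy lemmatizer: looks words up in a tiny, hypothetical dictionary of known forms.
LEMMAS = {
    "ate": "eat", "eating": "eat", "eats": "eat",
    "running": "run", "studies": "study",
    "better": "good", "best": "good",
}


def lemmatize(word: str) -> str:
    return LEMMAS.get(word, word)  # unknown words are returned unchanged


for w in ["eating", "ate", "running", "studies", "better"]:
    print(w, crude_stem(w), lemmatize(w))
# eating eat eat
# ate ate eat
# running runn run
# studies studi study
# better better good
```

Notice how the stemmer produces non-words like “runn” and “studi” and cannot relate “better” to “good”, while the dictionary lookup gets them right, but only for the words it knows.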

- POS Tagging (Parts of Speech tagging) → Part-of-speech tagging, also known as grammatical tagging or word-category disambiguation, assigns each word in a text or document its part of speech, based on both its definition and its context (its adjacent and related words in a phrase). For example, see the image below.

[Image: POS tagging example, with the tags shown in short form, e.g. NN]

Okay, that’s fine, but why do we even need POS tagging? Because in many cases a word can have different meanings, and identifying the correct one is very important for NLP tasks such as machine translation, speech recognition, and question answering. For example, look at these two sentences:

- She saw a bear

- Your efforts will bear fruit

In both sentences we encounter the same word, “bear”, but its meaning is different: a noun (the animal) in the first and a verb (to produce) in the second. If we assumed the same meaning in both sentences, we would get the meaning wrong. So it’s important to understand a word based on both its definition and its context. I guess you get the idea! If you want to learn more about it, you can read this article.
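To show how context helps, here is a deliberately tiny Python sketch that tags “bear” as a noun (`NN`) or a verb (`VB`), using the Penn Treebank tag names that nltk’s `pos_tag` also uses, by looking only at the previous word. The word sets and the `tag_bear` function are hypothetical and hand-written for this illustration; real taggers learn such patterns statistically from large annotated corpora.

```python
# Hypothetical, hand-written context rules for illustration only.
DETERMINERS = {"a", "an", "the", "this", "that"}
MODALS = {"will", "would", "can", "could", "may", "might", "shall", "should", "must"}


def tag_bear(sentence: str) -> str:
    """Guess whether 'bear' is a noun (NN) or a verb (VB) from the word before it.

    Raises ValueError if the sentence does not contain the word 'bear'.
    """
    words = sentence.lower().rstrip(".!?").split()
    i = words.index("bear")
    previous = words[i - 1] if i > 0 else ""
    if previous in DETERMINERS:
        return "NN"  # "a bear": the animal
    if previous in MODALS or previous == "to":
        return "VB"  # "will bear": to produce or carry
    return "UNKNOWN"


print(tag_bear("She saw a bear"))                # NN
print(tag_bear("Your efforts will bear fruit"))  # VB
```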

Here are all the POS tags you will see in NLP:

- Extracting information → After performing all the previous (pre-processing) steps, we finally extract meaningful insights from the text or document. All these steps can be performed with “nltk”, a Python library that helps with text pre-processing and information extraction (other programming languages have their own libraries, but the process remains the same).

Common Terminology in Natural language processing

- Corpus or corpora → A corpus (plural: corpora) is a collection of texts, such as product reviews, conversations, etc.

- Lexicons → A lexicon contains the vocabulary of a language and the meanings of its words; you can think of it as a dictionary.

- Word embedding → A word embedding is a representation of words for text analysis: a word is represented mathematically as a vector, learned by analyzing a corpus, so that each word, phrase, or even entire document becomes a vector in a high-dimensional space in which words with similar meanings end up close together.

- Word2vec → A technique for creating such word embeddings.

- N-grams → An n-gram is a sequence of “n” consecutive words. A single word like “run” is usually called a “unigram” (we use Latin numerical prefixes), two words make a “bigram”, three words a “trigram”, and four words a “Fri-gram”. Nah! just kidding XD. For longer sequences we usually switch to English numbers, like “four-gram” and “five-gram”.

- Normalization → Putting text into a standard format, for example converting all text to lower (or upper) case, or transforming variants like “gooood” or “gud” into “good”.

- Named Entity Recognition → It helps you easily identify key elements of the text like names of people, places, brands, etc.

- Transformers → A neural network architecture introduced in 2017 that solves sequence-to-sequence tasks while handling long-range dependencies with ease. For example, see the image below.

Look at the highlighted words: the model was able to work out that we are talking about “Griezmann” and use the pronoun and related words accordingly. That is easy for us but not for a machine, and Transformers have set new state-of-the-art results on many NLP benchmarks.
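Two of the terms above, normalization and n-grams, are simple enough to sketch in a few lines of Python. This is a minimal illustration under assumptions: the `SLANG` mapping, the rule of squeezing letters repeated three or more times down to two, and the function names `normalize` and `ngrams` are hypothetical choices for this example, not a standard normalizer.

```python
import re

# Hypothetical slang dictionary; real normalizers use larger, curated mappings.
SLANG = {"gud": "good", "u": "you", "thx": "thanks"}


def normalize(text: str) -> list[str]:
    """Lower-case, squeeze letters repeated 3+ times down to 2, and map known slang."""
    text = text.lower()
    text = re.sub(r"(\w)\1{2,}", r"\1\1", text)  # "gooood" -> "good"
    return [SLANG.get(word, word) for word in re.findall(r"\w+", text)]


def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """Return every run of n consecutive tokens (empty if there are fewer than n)."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


print(normalize("This movie was GOOOOD, gud acting thx"))
# ['this', 'movie', 'was', 'good', 'good', 'acting', 'thanks']
print(ngrams(["natural", "language", "processing", "is", "fun"], 2))
# [('natural', 'language'), ('language', 'processing'), ('processing', 'is'), ('is', 'fun')]
```

Squeezing repeats down to two letters (rather than one) keeps legitimate double letters such as “good” intact, at the cost of leaving some words like “soooo” as “soo”.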

Challenges of NLP

No matter how far we have come on the NLP journey, we still face many issues in natural language processing. This is due to many reasons, like variations in tone around the globe, grammar mistakes, non-existent words (everyone has built their own language 😂), irony, and sarcasm.

Here is a list of some common challenges in NLP:

- Contextual words and phrases and homonyms

- Synonyms

- Irony and sarcasm

- Ambiguity

- Errors in text or speech

- Colloquialisms and slang

- Domain-specific language

- Low-resource languages

- Lack of research and development

Still, the advances in NLP are so amazing that sometimes you won’t be able to tell the difference, and according to some claims, Google Assistant (or maybe Google Duplex) can now understand irony and sarcasm, which is awesome, isn’t it? Anyway, researchers are doing their best.

Future of NLP

The goal of NLP is to help AI understand and communicate with us smoothly in our own language, and we are already seeing progress toward that. Apart from this, NLP is doing many cool things: GPT-3, for example, can generate human-like text, summarize large documents, complete sentences, write stories, and much more, and there are also AI systems that can literally synthesize music (the future doesn’t seem safe for musicians). There are many more cool things you will find if you do a little research.

Okay, so that was all about NLP. I hope you enjoyed this article; if you did, please let me know in the comment section below 😀

Data Scientist with 3+ years of experience in building data-intensive applications in diverse industries. Proficient in predictive modeling, computer vision, natural language processing, data visualization etc. Aside from being a data scientist, I am also a blogger and photographer.

Aman Kumar https://buggyprogrammer.com/author/buggy5454/
