Introduction

Feature selection is one of the core concepts in machine learning, and it strongly affects the performance of your model. The features you use to train a model largely determine the performance you can achieve, and irrelevant or only partially relevant features can hurt it.

Feature selection is the process of selecting, automatically or manually, the features that contribute most to the prediction variable or output you are interested in.

Overview

- What is Feature Selection?

- Types of Feature Selection

- Benefits of Feature Selection

- Conclusion

What is Feature Selection?

It is a technique for finding the optimal features for our models. Optimal features are those that are relevant to the objective we want to achieve with the model.

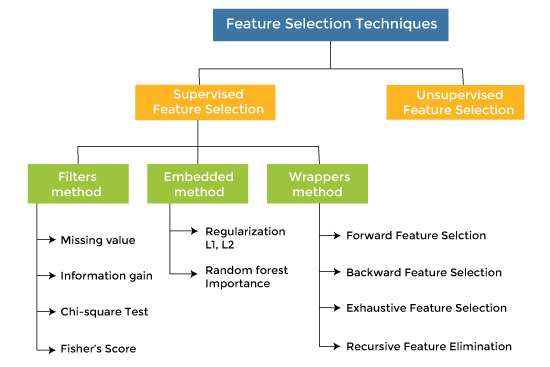

Types of Feature Selection

Three types of feature selection are most commonly used:

- Filter methods

- Wrapper methods

- Embedded methods

Filter methods: –

These methods are generally applied during the pre-processing step. They select features from the dataset independently of any machine learning algorithm. They are computationally fast and inexpensive, and they work well for removing duplicated, highly correlated, and redundant features. However, because they typically look at features one at a time or in pairs, they do not reliably remove multicollinearity, where one feature is a linear combination of several others.

Each feature is evaluated individually. This works well when features act in isolation (they do not depend on other features), but it falls short when a combination of features is what improves the overall performance of the model.
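As a rough illustration of a filter-style check that needs no model, the sketch below flags features that are highly correlated with a feature already kept. The function name `correlated_columns_to_drop`, the 0.9 threshold, and the toy data are assumptions made for this example, not something prescribed by the article.

```python
import numpy as np


def correlated_columns_to_drop(X, threshold=0.9):
    """Return indices of columns that are highly correlated with an earlier kept column."""
    # Absolute Pearson correlation between every pair of columns.
    corr = np.abs(np.corrcoef(X, rowvar=False))
    to_drop = []
    for j in range(corr.shape[1]):
        # Compare column j only with earlier columns that are still kept.
        if any(corr[i, j] > threshold for i in range(j) if i not in to_drop):
            to_drop.append(j)
    return to_drop


# Toy data (hypothetical): column 1 is almost a rescaled copy of column 0.
rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = rng.normal(size=200)
X = np.column_stack([a, 2.0 * a + 0.01 * rng.normal(size=200), b])

to_drop = correlated_columns_to_drop(X, threshold=0.9)
X_reduced = np.delete(X, to_drop, axis=1)
print("dropped columns:", to_drop)
```

Note that this only inspects pairs of features, so it will not catch a feature that is a combination of several others.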

Types of Filter methods

- Information gain

- Chi-square test

- Fisher’s score

- Correlation coefficient

- Variance threshold

- Mean absolute difference

- Dispersion ratio

Information gain: Information gain measures the reduction in entropy obtained by splitting the dataset on a given variable. It can be used for feature selection by calculating the information gain of each variable with respect to the target variable and keeping the variables with the highest values.
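A minimal sketch of this calculation for a discrete feature, assuming information gain is defined as the entropy of the target minus the weighted entropy of the target within each feature value. The helper names and the tiny arrays are made up for illustration.

```python
import numpy as np


def entropy(labels):
    """Shannon entropy (in bits) of a 1-D array of discrete labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def information_gain(feature, target):
    """Entropy of the target minus its weighted entropy after splitting on the feature."""
    feature = np.asarray(feature)
    target = np.asarray(target)
    conditional = 0.0
    for value in np.unique(feature):
        mask = feature == value
        # Weight each group's entropy by the fraction of rows in that group.
        conditional += mask.mean() * entropy(target[mask])
    return entropy(target) - conditional


# Hypothetical toy data: one feature mirrors the target, the other is unrelated to it.
target = np.array([0, 0, 1, 1, 0, 0, 1, 1])
informative = np.array(["a", "a", "b", "b", "a", "a", "b", "b"])
uninformative = np.array(["x", "y", "x", "y", "x", "y", "x", "y"])

gain_informative = information_gain(informative, target)
gain_uninformative = information_gain(uninformative, target)
print(gain_informative, gain_uninformative)
```

Features would then be ranked by their gain and the top ones kept. For continuous features, a library estimator of mutual information is usually a better fit than this counting approach.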

Chi-square test: The chi-square test measures the relationship between categorical variables. The chi-square statistic is calculated between each feature and the target variable, and the desired number of features with the highest chi-square values is selected.
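One way to try this is with scikit-learn's `SelectKBest` and `chi2`. The sketch below is only an example: the choice of the Iris dataset and of `k=2` are assumptions, and `chi2` expects non-negative feature values (it is designed for counts or frequencies).

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, chi2

iris = load_iris()
X, y = iris.data, iris.target

# Score every feature against the target and keep the two highest-scoring ones.
selector = SelectKBest(score_func=chi2, k=2).fit(X, y)
X_selected = selector.transform(X)

selected_names = [
    name for name, keep in zip(iris.feature_names, selector.get_support()) if keep
]
print("chi-square scores:", selector.scores_)
print("selected features:", selected_names)
```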

Fisher’s score: Fisher’s score is one of the popular supervised feature selection techniques. It ranks the variables by Fisher’s criterion in descending order, and we can then select the variables with the largest scores.
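The article does not give a formula, so the sketch below assumes one common formulation: for each feature, the class-size-weighted spread of the class means around the overall mean, divided by the class-size-weighted variance within each class. The function name `fisher_score` and the synthetic data are hypothetical.

```python
import numpy as np


def fisher_score(X, y):
    """Per-feature Fisher score: between-class scatter divided by within-class scatter."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for label in np.unique(y):
        X_class = X[y == label]
        n_class = X_class.shape[0]
        between += n_class * (X_class.mean(axis=0) - overall_mean) ** 2
        within += n_class * X_class.var(axis=0)
    # Assumes no feature is constant within every class (within > 0).
    return between / within


# Synthetic data (assumption): feature 0 separates the classes, feature 1 is pure noise.
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 50)
X = np.column_stack([3.0 * y + rng.normal(size=100), rng.normal(size=100)])

scores = fisher_score(X, y)
ranking = np.argsort(scores)[::-1]  # feature indices, best first
print("scores:", scores, "ranking:", ranking)
```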

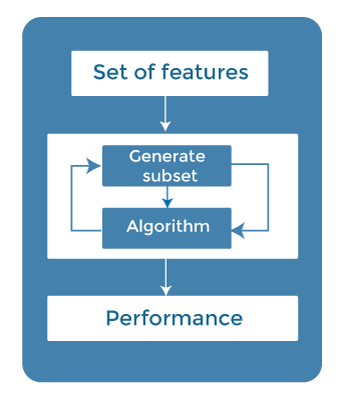

Wrapper methods: –

Wrapper methods, often described as greedy algorithms, train a model iteratively on different subsets of features. Based on the conclusions drawn from the previous round of training, features are added or removed.

Stopping criteria for selecting the best subset are usually defined in advance by the person training the model, for example when the performance of the model starts to decrease or when a specific number of features has been reached. The selection process is tied to the specific machine learning algorithm that we are trying to fit on the dataset.

The main advantage of wrapper methods over filter methods is that they search for a feature subset tuned to the model being trained, which usually gives better accuracy than filter methods, but they are computationally more expensive.

Types of Wrapper methods

- Forward selection

- Backward elimination

- Exhaustive feature selection

- Recursive feature elimination

- Bidirectional elimination

- Forward selection – Forward selection is an iterative process that begins with an empty set of features. In each iteration it adds one feature and checks whether the performance of the model improves. The process continues until adding a new feature no longer improves the performance of the model.

- Backward elimination – Backward elimination is also an iterative approach, but it works in the opposite direction to forward selection. It starts with all the features and removes the least significant feature at each iteration. The elimination continues until removing a feature no longer improves the performance of the model.

- Exhaustive feature selection – Exhaustive feature selection is the most thorough of these methods: it evaluates every feature subset by brute force. It tries each possible combination of features and returns the best-performing subset, so its cost grows exponentially with the number of features.

- Recursive feature elimination – Recursive feature elimination is a greedy optimization approach in which features are selected by recursively considering smaller and smaller subsets. An estimator is trained on each subset, and the importance of each feature is determined through the estimator's `coef_` attribute or its `feature_importances_` attribute.
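The wrapper strategies described above can be sketched with scikit-learn. This is an illustrative setup rather than a recommended recipe: the Iris dataset, the logistic regression model, the target of two features, and the hand-written `exhaustive_search` helper are all assumptions made for the example. Here the sequential selectors stop at a fixed number of features rather than when the score stops improving.

```python
from itertools import combinations

import numpy as np
from sklearn.datasets import load_iris
from sklearn.feature_selection import RFE, SequentialFeatureSelector
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Forward selection: start from no features and add one at a time.
forward = SequentialFeatureSelector(
    model, n_features_to_select=2, direction="forward", cv=5
).fit(X, y)

# Backward elimination: start from all features and remove one at a time.
backward = SequentialFeatureSelector(
    model, n_features_to_select=2, direction="backward", cv=5
).fit(X, y)

# Recursive feature elimination: refit, drop the weakest feature (by coef_), repeat.
rfe = RFE(model, n_features_to_select=2, step=1).fit(X, y)


def exhaustive_search(estimator, X, y, cv=5):
    """Score every non-empty feature subset and return the best one (brute force)."""
    best_score, best_subset = -np.inf, None
    n_features = X.shape[1]
    for size in range(1, n_features + 1):
        for subset in combinations(range(n_features), size):
            score = cross_val_score(estimator, X[:, list(subset)], y, cv=cv).mean()
            if score > best_score:
                best_score, best_subset = score, subset
    return best_subset, best_score


best_subset, best_score = exhaustive_search(model, X, y)

print("forward   :", forward.get_support())
print("backward  :", backward.get_support())
print("RFE       :", rfe.support_)
print("exhaustive:", best_subset, round(best_score, 3))
```

With four features the exhaustive search only has 15 subsets to score. The number of subsets doubles with every extra feature, which is why the greedy variants are usually preferred on wider datasets.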

Embedded methods: –

In embedded methods, feature selection is built into the learning algorithm itself, so the model performs selection as part of training. These methods combine the benefits of both wrapper and filter methods by taking interactions between features into account.

Embedded methods counter the drawbacks of filter and wrapper methods and merge their advantages. They are iterative in the sense that they consider each iteration of the model training process and extract the features that contribute most to training in that iteration.

These methods are fast, like filter methods, but more accurate, and they also take combinations of features into account.
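The article does not name a specific embedded technique, so the sketch below uses L1-regularized linear regression (Lasso) as one well-known example: the penalty drives some coefficients to zero during training, and scikit-learn's `SelectFromModel` keeps the features whose coefficients remain non-zero. The synthetic dataset and `alpha=1.0` are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import Lasso

# Synthetic regression data (assumption): 10 features, only 3 of them informative.
X, y = make_regression(
    n_samples=200, n_features=10, n_informative=3, noise=1.0, random_state=0
)

# Training the Lasso model and selecting features happen in the same fit.
selector = SelectFromModel(Lasso(alpha=1.0)).fit(X, y)

selected_mask = selector.get_support()
X_selected = selector.transform(X)
coefficients = selector.estimator_.coef_

print("coefficients:", np.round(coefficients, 2))
print("kept feature indices:", np.flatnonzero(selected_mask))
```

Tree-based models that expose `feature_importances_` can be plugged into `SelectFromModel` in the same way.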

Benefits of feature selection: –

- Reduces Overfitting: Less redundant data means less opportunity to make decisions based on noise.

- Improves Accuracy: Less misleading data can improve model accuracy.

- Reduces Training Time: Fewer features reduce the complexity of the algorithm, so it trains faster.

Conclusion: –

In this article, we have discussed the different types of feature selection techniques and how they work. Feature selection helps reduce overfitting and can improve accuracy in machine learning and deep learning models. Using it generally improves model performance and reduces errors, which is why feature selection is an important step when building a machine learning model.

Data Scientist with 3+ years of experience in building data-intensive applications in diverse industries. Proficient in predictive modeling, computer vision, natural language processing, data visualization etc. Aside from being a data scientist, I am also a blogger and photographer.

Aman Kumar: https://buggyprogrammer.com/author/buggy5454/
